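As microservices and cloud-native services become mainstream, more and more customers want to move to containers. Drawing on the Kubernetes (k8s) articles I have read recently, this post walks through building a highly available k8s cluster by hand in the cloud, covering both the steps and the pitfalls I hit along the way. The servers used here are Alibaba Cloud instances. Without further ado, let's begin:

I. Preparing the environment

Because the goal is a highly available cluster, I used seven cloud servers with 2 cores and 8 GB of memory each, as listed below: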

| Hostname | OS version | Docker version | IP address | keepalived | flannel | Spec | Role |
|---|---|---|---|---|---|---|---|
| Master01 | centos 7.8 | 18.09.9 | 192.168.1.11 | v1.3.5 | v0.11.0 | 2 cores, 8 GB | control-plane node |
| Master02 | centos 7.8 | 18.09.9 | 192.168.1.12 | v1.3.5 | v0.11.0 | 2 cores, 8 GB | control-plane node |
| Master03 | centos 7.8 | 18.09.9 | 192.168.1.13 | v1.3.5 | v0.11.0 | 2 cores, 8 GB | control-plane node |
| VIP | centos 7.8 | 18.09.9 | 192.168.1.10 | v1.3.5 | v0.11.0 | 2 cores, 8 GB | floating IP of the control plane |
| Work01 | centos 7.8 | 18.09.9 | 192.168.1.14 | - | - | 2 cores, 8 GB | worker node |
| Work02 | centos 7.8 | 18.09.9 | 192.168.1.15 | - | - | 2 cores, 8 GB | worker node |
| Work03 | centos 7.8 | 18.09.9 | 192.168.1.16 | - | - | 2 cores, 8 GB | worker node |
| Client | centos 7.8 | - | 192.168.1.17 | - | - | 2 cores, 8 GB | client |

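There are seven servers in total: three masters, three workers, and one client. The VIP row is the floating IP shared by the masters, not a separate machine.

Kubernetes versions installed: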

| Hostname | kubelet | kubeadm | kubectl | Notes |
|---|---|---|---|---|
| master01 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| master02 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| master03 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| work01 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| work02 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| work03 | v1.16.4 | v1.16.4 | v1.16.4 | kubectl optional |
| Client | - | - | v1.16.4 | Client |

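II. Architecture and component overview

This article uses kubeadm to build the highly available k8s cluster. High availability of a k8s cluster really means high availability of each core component. An active/standby model is used here, with the following architecture:

Active/standby high-availability architecture: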

| Core component | HA mode | HA mechanism |
|---|---|---|
| apiserver | active/standby | keepalived |
| controller-manager | active/standby | leader election |
| scheduler | active/standby | leader election |
| etcd | cluster | kubeadm |

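apiserver: made highly available with keepalived; when a node fails, the keepalived VIP moves to another node.

controller-manager: Kubernetes elects a leader internally (controlled by the --leader-elect flag, which defaults to true), so only one controller-manager instance is active in the cluster at any moment while the others stand by.

scheduler: Kubernetes elects a leader internally (controlled by the --leader-elect flag, which defaults to true), so only one scheduler instance is active in the cluster at any moment while the others stand by.

etcd: kubeadm creates the etcd cluster automatically, which provides high availability. Deploy an odd number of members; a three-member cluster tolerates the loss of at most one machine.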
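The fault-tolerance figure follows from etcd's majority rule: writes need floor(N/2) + 1 healthy members. As a rough sketch of that arithmetic (the function names are illustrative and not part of any tool):

```shell
#!/usr/bin/env bash
# Quorum arithmetic for an etcd cluster of N members (illustrative helper names).

# Minimum number of healthy members needed to commit writes: floor(N/2) + 1.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while the cluster still has quorum.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

With three members the quorum is 2, so one failure is tolerated. A fourth member raises the quorum to 3 without tolerating any additional failure, which is why odd sizes are preferred.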

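III. Configuring the deployment environment

Perform the steps in this part on every node in the cluster. Turn off the firewall on all servers.

1. Configure the hostname

1.1 Change the hostname

[root@aliyun ~]# hostnamectl set-hostname master01

[root@aliyun ~]# hostname master01

Log out and back in to see the new hostname master01.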

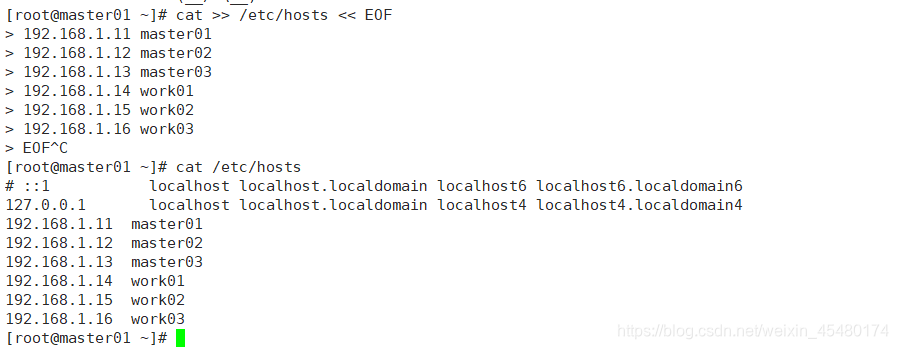

1.2 Edit the hosts file

[root@master01 ~]# cat >> /etc/hosts << EOF

192.168.1.11 master01

192.168.1.12 master02

192.168.1.13 master03

192.168.1.14 work01

192.168.1.15 work02

192.168.1.16 work03

EOF

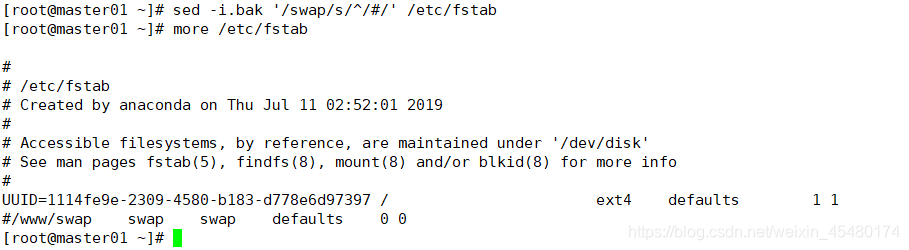

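2. Disable the swap partition

2.1 Disable swap temporarily

[root@master01 ~]# swapoff -a

2.2 Make it permanent

To make the change permanent, after disabling swap on the command line also edit /etc/fstab and comment out the swap entry with #:

[root@master01 ~]# sed -i.bak '/swap/s/^/#/' /etc/fstab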
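The sed command above prefixes every line containing the string swap, including lines that are already commented. A slightly stricter variant is sketched below; it assumes GNU sed, and disable_swap_entries is a hypothetical helper name. It only touches uncommented lines that have swap as a whole field, so running it twice is harmless. The demonstration works on a scratch file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file, skipping lines
# that are already commented. Requires GNU sed (for -i and -E).
disable_swap_entries() {
  local fstab="$1"
  # Keep one pristine backup; do not overwrite it on later runs.
  [ -e "${fstab}.bak" ] || cp -p "$fstab" "${fstab}.bak"
  sed -i -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
}

# Demonstration on a scratch copy, not on the real /etc/fstab.
demo=$(mktemp)
printf '%s\n' \
  '/dev/vda1 / ext4 defaults 1 1' \
  '/dev/vda2 swap swap defaults 0 0' > "$demo"
disable_swap_entries "$demo"
disable_swap_entries "$demo"   # second run must not add another '#'
cat "$demo"
rm -f "$demo" "${demo}.bak"
```

On a real node the call would be disable_swap_entries /etc/fstab, run as root.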

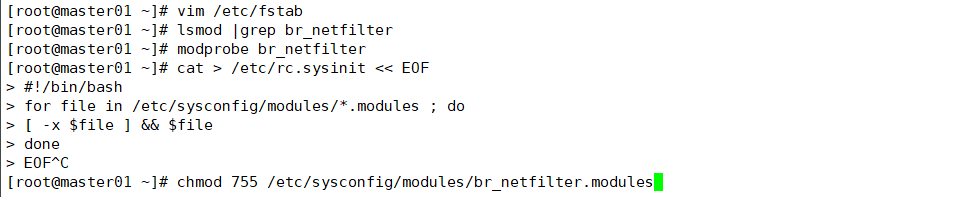

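3. Kernel parameter changes

This installation uses flannel for the k8s network, which requires the kernel parameter bridge-nf-call-iptables=1. Setting that parameter requires the br_netfilter module to be loaded.

3.1 Load the br_netfilter module

Check for the br_netfilter module:

[root@master01 ~]# lsmod |grep br_netfilter

If the module is not loaded, run the commands below; otherwise skip them.

Load br_netfilter from the command line (this does not survive a reboot):

[root@master01 ~]# modprobe br_netfilter

Load br_netfilter permanently. The first heredoc delimiter is quoted ('EOF') so that $file is written to the script literally instead of being expanded by the current shell:

[root@master01 ~]# cat > /etc/rc.sysinit << 'EOF'

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x $file ] && $file

done

EOF

[root@master01 ~]# cat > /etc/sysconfig/modules/br_netfilter.modules << EOF

modprobe br_netfilter

EOF

[root@master01 ~]# chmod 755 /etc/sysconfig/modules/br_netfilter.modules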

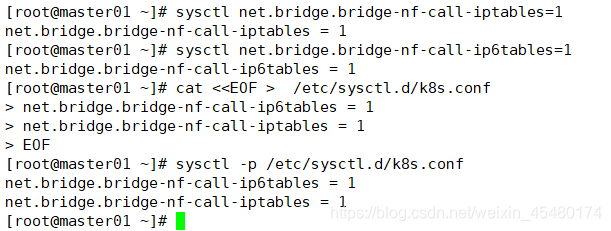

3.2 Change the kernel parameters temporarily

[root@master01 ~]# sysctl net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# sysctl net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-ip6tables = 1

3.3 Change the kernel parameters permanently

[root@master01 ~]# cat <<EOF >/etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master01 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

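4. Set up the Kubernetes yum repository

4.1 Add the Kubernetes repository

[root@master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[ ]: the text in square brackets is the repository id; it must be unique and identifies the repository

name: repository name, free-form

baseurl: repository URL

enabled: whether the repository is enabled; the default 1 means enabled

gpgcheck: whether to verify the signatures of packages obtained from this repository; 1 enables verification

repo_gpgcheck: whether to verify the repository metadata (the package list); 1 enables verification

gpgkey=URL: location of the public key used for signature verification; required when gpgcheck is 1 and unnecessary when gpgcheck is 0

4.2 Refresh the yum cache

[root@master01 ~]# yum clean all

[root@master01 ~]# yum -y makecache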

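5. Set up passwordless SSH login

Configure passwordless login between the control-plane nodes. Run this step only on control-plane node master01.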

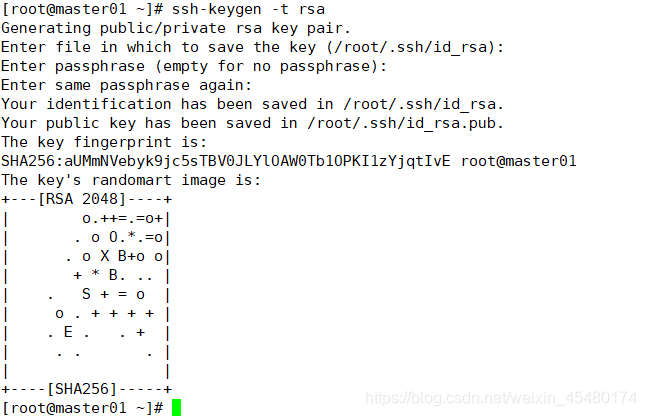

5.1 Create the key pair

[root@master01 ~]# ssh-keygen -t rsa

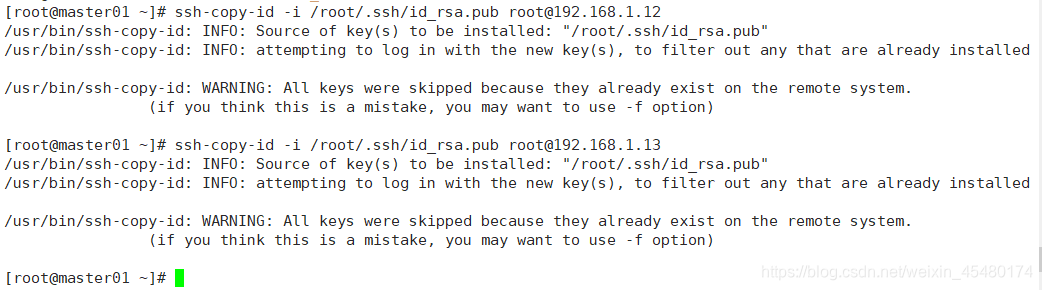

5.2 Copy the public key to the other control-plane nodes

[root@master01 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.1.12

[root@master01 ~]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.1.13

5.3 Test passwordless login

[root@master01 ~]# ssh 192.168.1.12

[root@master01 ~]# ssh master03

The test shows that master01 can log in to master02 and master03 directly, without entering a password.

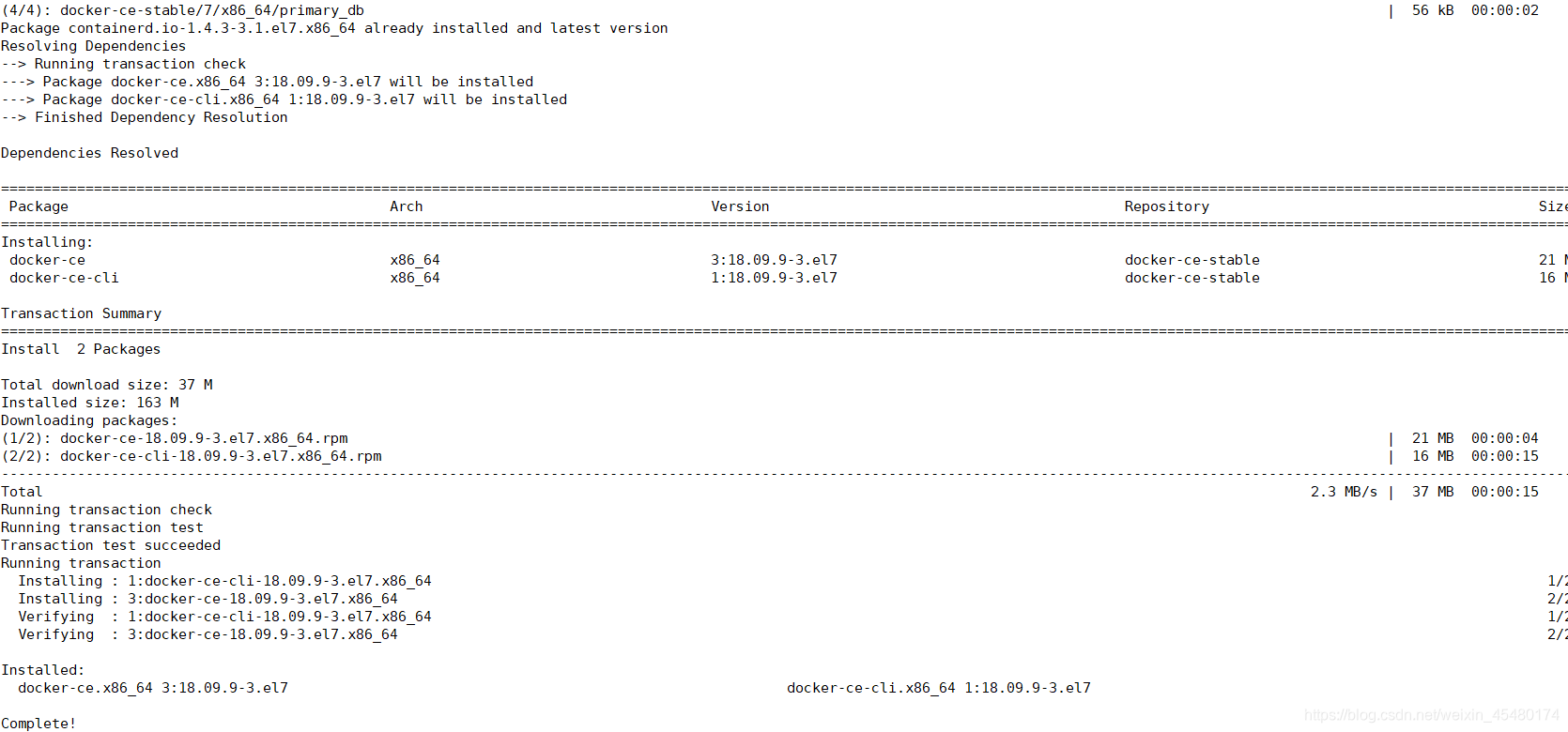

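IV. Installing Docker

Perform the steps in this part on every node.

1. Install dependencies

[root@master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master01 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo

[root@master01 ~]# yum install epel-release -y

[root@master01 ~]# yum install container-selinux -y

If the installation reports that container-selinux is missing, run the wget step to switch the yum repository and then the two installs after it; otherwise skip the wget and the steps that follow it.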

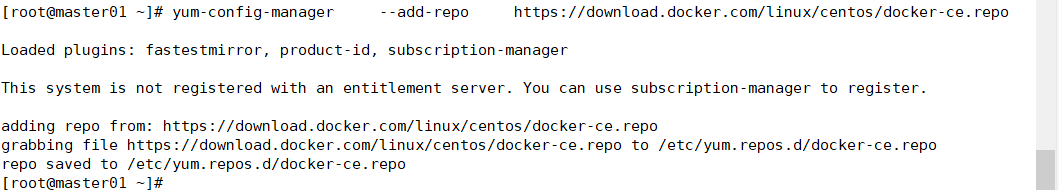

2. Configure the Docker repository

[root@master01 ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

3. Install Docker CE

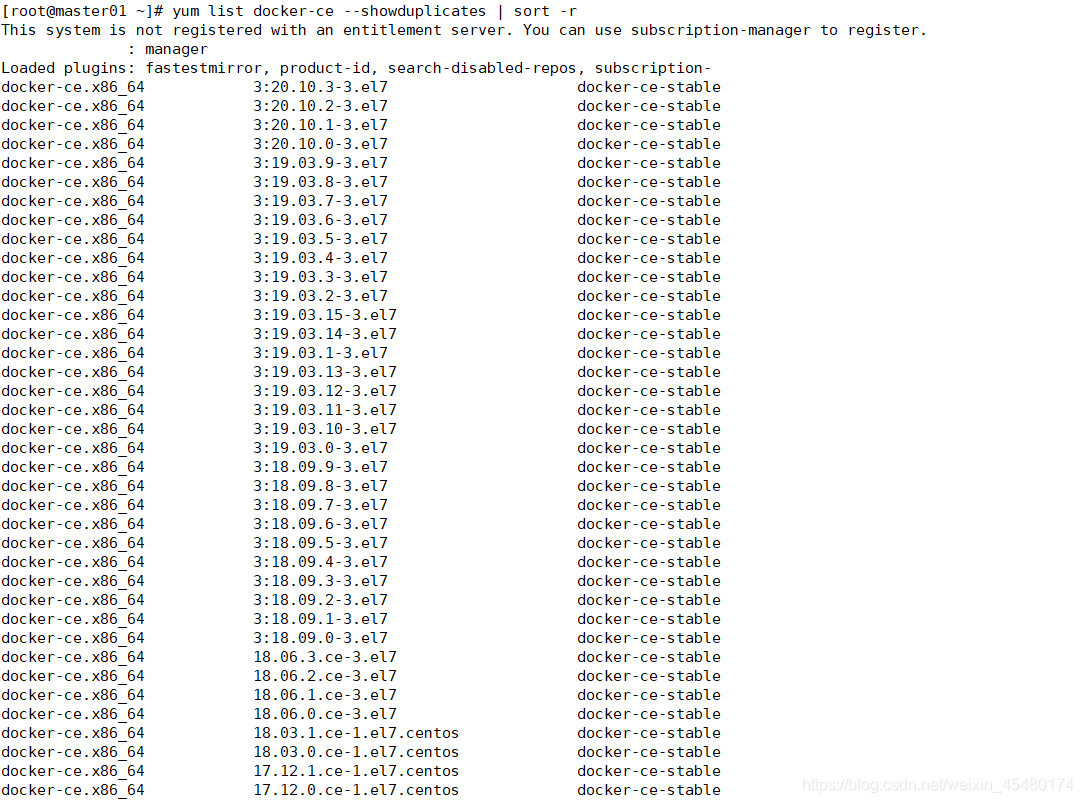

This article installs Docker version 18.09.9.

3.1 List the available Docker versions

[root@master01 ~]# yum list docker-ce --showduplicates | sort -r

3.2 Install Docker

[root@master01 ~]# yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y

Output like that in the screenshot means the installation succeeded.

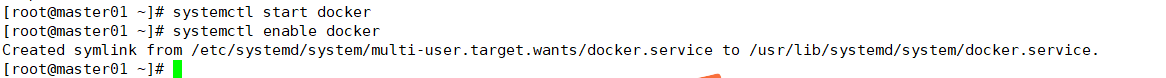

4. Start Docker

[root@master01 ~]# systemctl start docker

[root@master01 ~]# systemctl enable docker

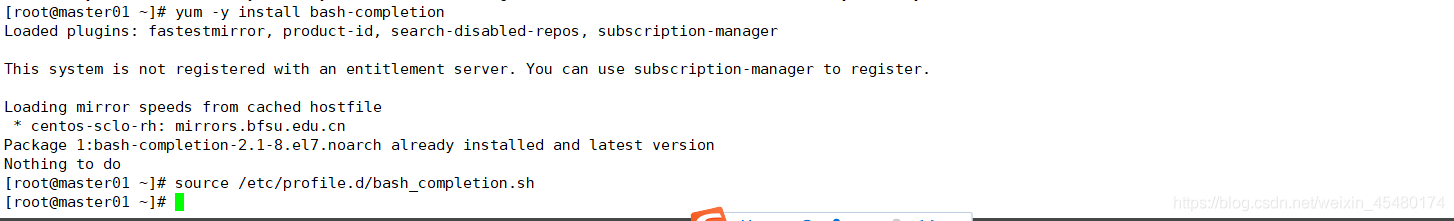

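5. Install command completion

Install bash-completion and load it:

[root@master01 ~]# yum -y install bash-completion

[root@master01 ~]# source /etc/profile.d/bash_completion.sh

6. Configure a registry mirror

Docker Hub's servers are located abroad, so pulling images is slow by default; a registry mirror (accelerator) speeds this up. The main mirrors available in China are Docker's official China registry mirror, the Alibaba Cloud accelerator, and the DaoCloud accelerator. The Alibaba Cloud accelerator is used as the example here.

First log in to Alibaba Cloud Container Registry at https://cr.console.aliyun.com . If you do not have an Alibaba Cloud account you can register one. You can also register through me, and purchases made that way get a discount.

After logging in, choose the image accelerator under the image tools, pick the region that matches your servers, and configure daemon.json as the documentation shown there describes:

[root@master01 ~]# sudo mkdir -p /etc/docker

[root@master01 ~]# sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://p2gz2n40.mirror.aliyuncs.com"]

}

EOF

[root@master01 ~]# sudo systemctl daemon-reload

[root@master01 ~]# sudo systemctl restart docker

The mirror is now configured.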

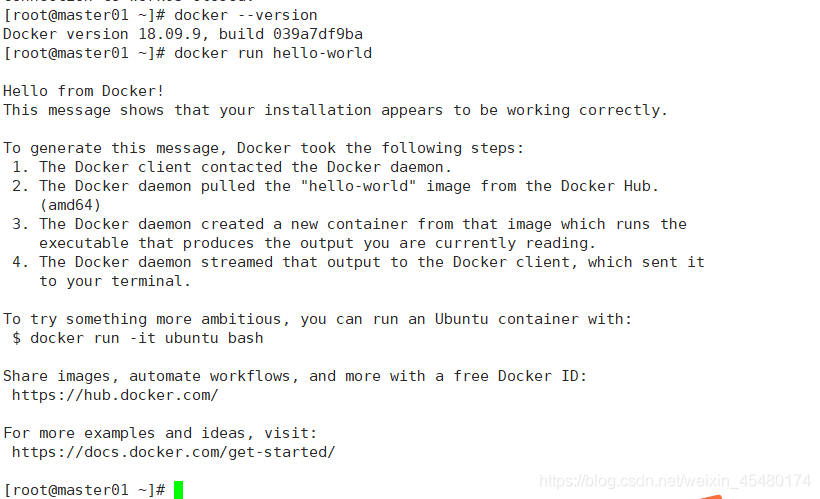

7. Verify the installation

[root@master01 ~]# docker --version

[root@master01 ~]# docker run hello-world

Checking the Docker version and running the hello-world container confirms that Docker was installed successfully.

8. Change the cgroup driver

If the cgroup driver is left unchanged, kubeadm later prints the following warning:

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

Edit daemon.json and add the line "exec-opts": ["native.cgroupdriver=systemd"]:

[root@master01 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://p2gz2n40.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

Note the comma at the end of the registry-mirrors line; without it Docker reports an error.

Reload Docker once the configuration is done:

[root@master01 ~]# systemctl daemon-reload

[root@master01 ~]# systemctl restart docker

V. Installing and configuring keepalived

Perform the steps in this part on all control-plane nodes.

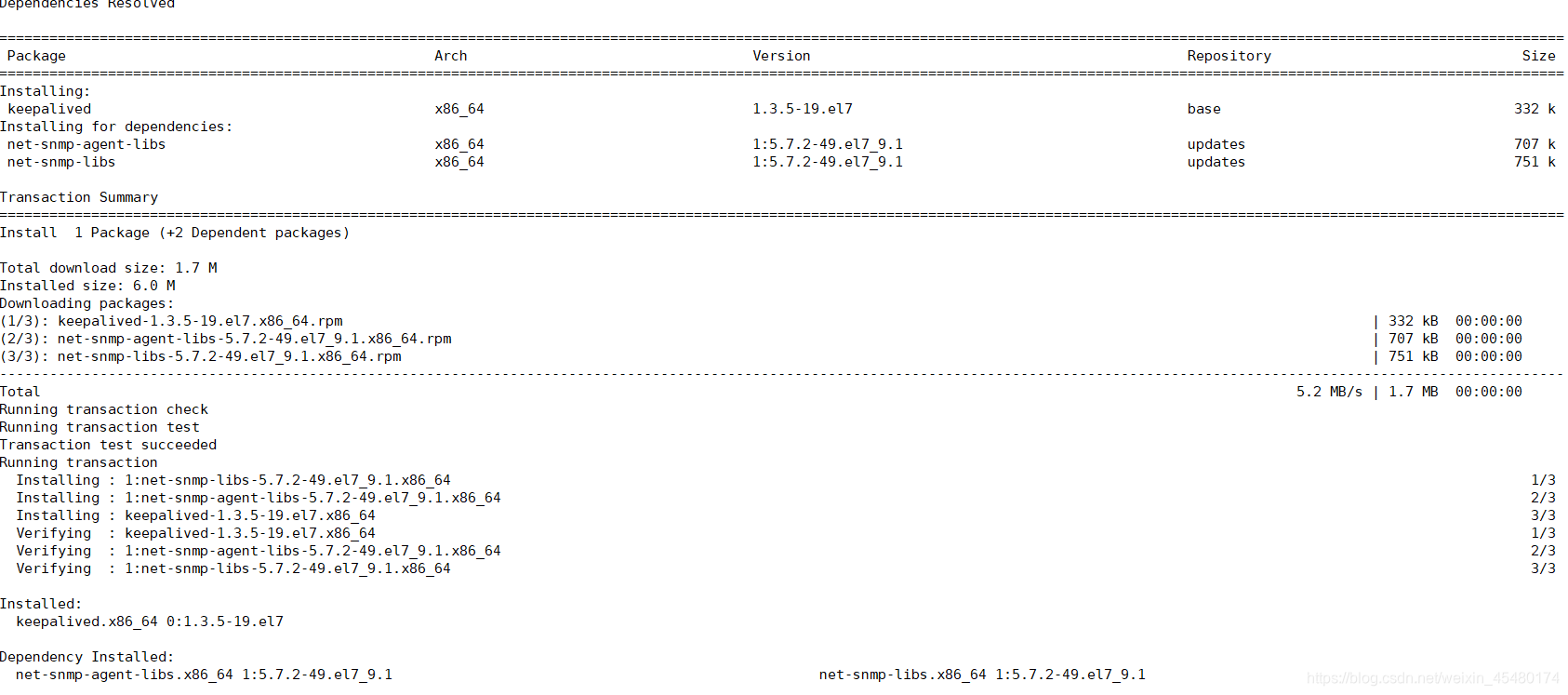

Install keepalived and edit its configuration

[root@master01 ~]# yum -y install keepalived

keepalived configuration on master01:

[root@master01 ~]# more /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id master01

}

vrrp_instance VI_1 {

state MASTER

interface eth0 # change to the local NIC name

virtual_router_id 50

priority 100 # a higher value means a higher priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.10 # the VIP address

}

}

keepalived configuration on master02:

[root@master02 ~]# more /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id master02

}

vrrp_instance VI_1 {

state BACKUP

interface eth0 # change to the local NIC name

virtual_router_id 50

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.10 # the VIP address

}

}

keepalived configuration on master03:

[root@master03 ~]# more /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id master03

}

vrrp_instance VI_1 {

state BACKUP

interface eth0 # change to the local NIC name

virtual_router_id 50

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.10 # the VIP address

}

}
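The three files differ only in router_id, state, and priority, so one way to avoid copy-paste mistakes (such as a missing closing brace) is to generate them. Below is a minimal sketch that assumes the same eth0 interface and VIP as above; render_keepalived_conf is a hypothetical helper and not something keepalived ships.

```shell
#!/usr/bin/env bash
# Print a keepalived.conf for one control-plane node.
# Arguments: router_id state priority [interface] [vip]
render_keepalived_conf() {
  local router_id="$1" state="$2" priority="$3"
  local interface="${4:-eth0}" vip="${5:-192.168.1.10}"
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id ${router_id}
}
vrrp_instance VI_1 {
    state ${state}
    interface ${interface}
    virtual_router_id 50
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}

# Preview the three variants used in this article.
render_keepalived_conf master01 MASTER 100
render_keepalived_conf master02 BACKUP 90
render_keepalived_conf master03 BACKUP 80
```

On each master the output could be redirected into place, for example render_keepalived_conf master02 BACKUP 90 > /etc/keepalived/keepalived.conf.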

Start keepalived

Start the keepalived service on every control-plane node and enable it at boot:

[root@master01 ~]# systemctl start keepalived

[root@master01 ~]# systemctl enable keepalived

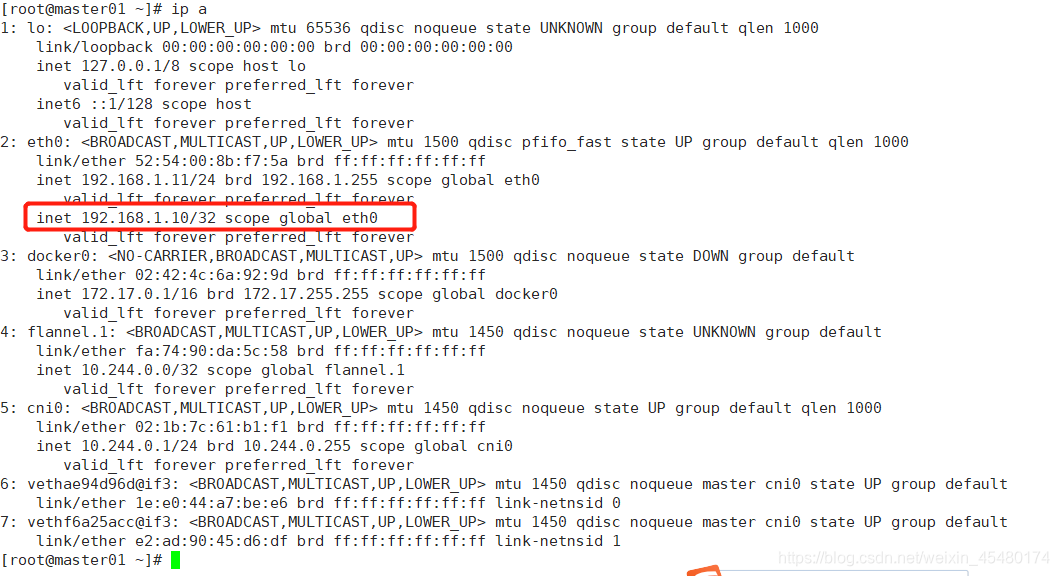

Check the VIP

On master01:

[root@master01 ~]# ip a
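Reading through the full ip a output by eye is error-prone, and a loose filter such as grep 10 also matches unrelated addresses. A small sketch of an exact check follows; holds_vip is a hypothetical helper that reads an address listing on stdin, and the sample text stands in for real command output.

```shell
#!/usr/bin/env bash
# Succeeds when the address listing on stdin contains exactly the given VIP.
holds_vip() {
  local vip="$1"
  # -F treats the dots literally; the trailing '/' stops 192.168.1.10
  # from matching 192.168.1.100.
  grep -qF "inet ${vip}/"
}

# Example with captured text; on a node one would pipe in `ip -4 addr show`.
sample='    inet 192.168.1.11/24 brd 192.168.1.255 scope global eth0
    inet 192.168.1.10/32 scope global eth0'
if printf '%s\n' "$sample" | holds_vip 192.168.1.10; then
  echo "VIP present"
else
  echo "VIP absent"
fi
```

On a control-plane node the equivalent call would be ip -4 addr show | holds_vip 192.168.1.10.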

VI. Installing k8s

Perform the steps in this part on every node in the cluster.

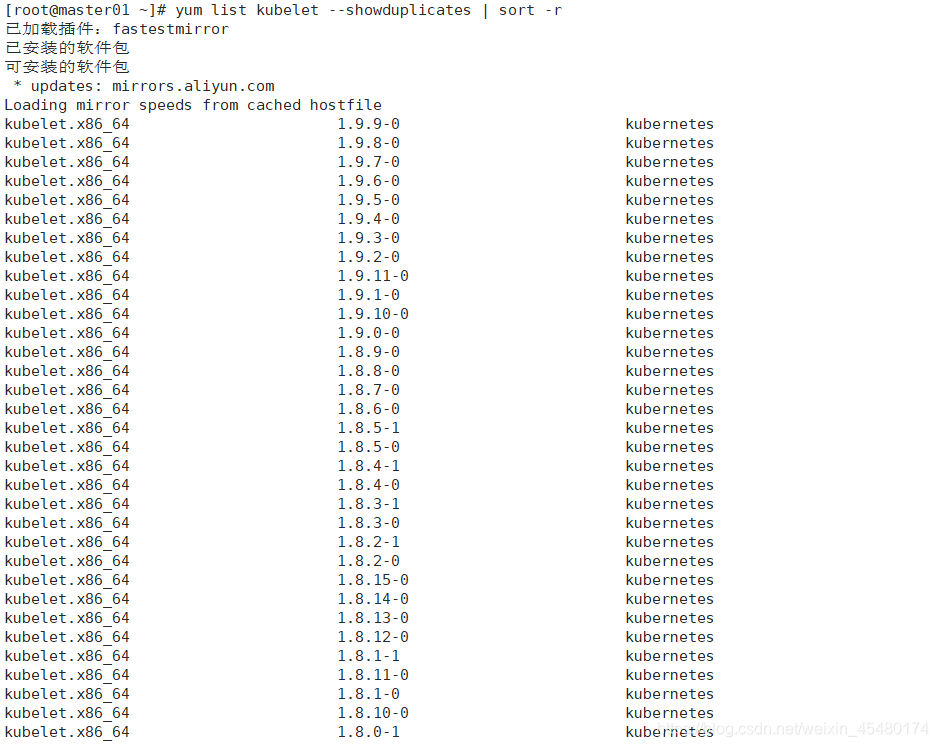

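1. Install kubelet, kubeadm, and kubectl

First list the available versions:

[root@master01 ~]# yum list kubelet --showduplicates | sort -r

The kubelet version installed here is 1.16.4, which supports Docker versions 1.13.1, 17.03, 17.06, 17.09, 18.06, and 18.09.

1.1 Install the three packages

[root@master01 ~]# yum install -y kubelet-1.16.4 kubeadm-1.16.4 kubectl-1.16.4

Notes:

kubelet: runs on every node in the cluster and starts Pods and containers

kubeadm: the command-line tool that initializes and bootstraps the cluster

kubectl: the command-line client for talking to the cluster; it is used to deploy and manage applications, inspect resources, and create, delete, and update components

1.2 Start kubelet and configure kubectl command completion

Start the kubelet service and enable it at boot:

[root@master01 ~]# systemctl enable kubelet && systemctl start kubelet

Configure kubectl command completion:

[root@master01 ~]# echo "source <(kubectl completion bash)" >> ~/.bash_profile

[root@master01 ~]# source .bash_profile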

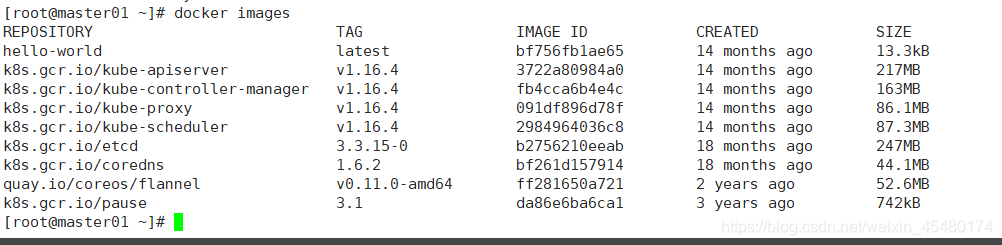

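2. Download the container images

2.1 Image download script

Kubernetes was developed by Google, so almost all of its installation components and Docker images are hosted on Google's own sites, which may be unreachable from some networks. The workaround is to pull the images from an Alibaba Cloud registry and then retag them locally with the default image names. The script crimages.sh does this.

[root@master01 ~]# cat crimages.sh

#!/bin/bash

url=registry.cn-hangzhou.aliyuncs.com/loong576

version=v1.16.4

# image names required by this Kubernetes version, with the k8s.gcr.io/ prefix removed

images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}'`)

for imagename in ${images[@]} ; do

docker pull $url/$imagename

docker tag $url/$imagename k8s.gcr.io/$imagename

docker rmi -f $url/$imagename

done

url is the address of the Alibaba Cloud image registry, and version is the Kubernetes version being installed.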
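To see what the script will do for a given image before pulling anything, the name mapping can be tried on its own. The sketch below only prints the docker commands and does not run them. plan_image_pull is a hypothetical helper, the mirror path is the one from the script above, and shell parameter expansion stands in for the awk call.

```shell
#!/usr/bin/env bash
# Print (without executing) the docker commands needed to fetch one image
# from a mirror and retag it under its original name.
mirror="registry.cn-hangzhou.aliyuncs.com/loong576"

plan_image_pull() {
  local original="$1"          # e.g. k8s.gcr.io/kube-apiserver:v1.16.4
  local name="${original##*/}" # strip everything up to the last '/'
  echo "docker pull ${mirror}/${name}"
  echo "docker tag ${mirror}/${name} ${original}"
  echo "docker rmi -f ${mirror}/${name}"
}

plan_image_pull "k8s.gcr.io/kube-apiserver:v1.16.4"
```

Feeding it each line of kubeadm config images list --kubernetes-version=v1.16.4 gives a dry run of the whole download.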

2.2 Download the images

Run crimages.sh to download the images for the specified version:

[root@master01 ~]# ./crimages.sh

[root@master01 ~]# docker images

VII. Initializing the master

Perform the steps in this part only on control-plane node master01.

1. The initialization configuration file kubeadm-conf.yaml

[root@master01 ~]# cat kubeadm-conf.yaml

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.16.4

apiServer:

  certSANs: # list the hostname and IP of every kube-apiserver node, plus the VIP

  - master01

  - master02

  - master03

  - work01

  - work02

  - work03

  - 192.168.1.11

  - 192.168.1.12

  - 192.168.1.13

  - 192.168.1.14

  - 192.168.1.15

  - 192.168.1.16

  - 192.168.1.10

controlPlaneEndpoint: "192.168.1.10:6443"

networking:

  podSubnet: "10.244.0.0/16"

Initialize the master

[root@master01 ~]# kubeadm init --config=kubeadm-conf.yaml

Record the kubeadm join commands from the output; they are needed later to join the worker nodes and the other control-plane nodes to the cluster.

You can now join any number of control-plane nodes by copying certificate authorities and service account keys on each node and then running the following as root:

kubeadm join 192.168.1.10:6443 --token f2h6fb.iinbxucou1qpwliv --discovery-token-ca-cert-hash sha256:51808557ef1284ba6f6727a086fb25c803b64806ded0c9873aa92e5ce3775795 --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.10:6443 --token f2h6fb.iinbxucou1qpwliv --discovery-token-ca-cert-hash sha256:51808557ef1284ba6f6727a086fb25c803b64806ded0c9873aa92e5ce3775795
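If the join output was not saved, or the token has expired (kubeadm tokens are valid for 24 hours by default), the pieces can be regenerated on master01. The sketch below follows the openssl pipeline from the kubeadm documentation for recomputing the CA certificate hash. ca_cert_hash is a hypothetical wrapper name, and the default path assumes a standard kubeadm layout with an RSA CA key.

```shell
#!/usr/bin/env bash
# Compute the value for --discovery-token-ca-cert-hash from a CA certificate:
# the SHA-256 digest of the DER-encoded public key.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On master01 this would print the hash to pass as "sha256:<hash>":
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
# A fresh token together with the full worker join command can be printed with:
#   kubeadm token create --print-join-command
```

For a control-plane node, append --control-plane to the printed command after the certificates have been copied as described in the next part.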

If initialization fails:

Run kubeadm reset and then initialize again:

[root@master01 ~]# kubeadm reset

[root@master01 ~]# rm -rf $HOME/.kube/config

2. Load the environment variable

[root@master01 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master01 ~]# source .bash_profile

All operations here run as root. For a non-root user, run the following instead:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

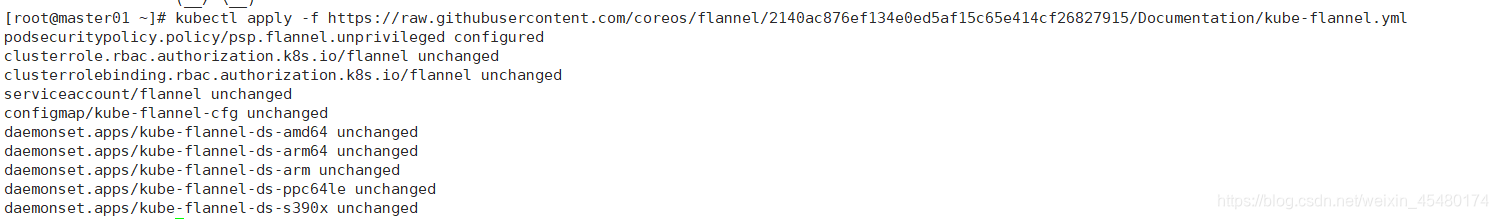

3. Install the flannel network

Create the flannel network from master01:

[root@master01 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

Because of network issues the installation may fail. In that case download kube-flannel.yml first and then run apply on the local file.

VIII. Joining the other control-plane nodes to the cluster

1. Distribute the certificates

Distribute the certificates from master01:

On master01, run the script cert-main-master.sh to copy the certificates to master02 and master03.

[root@master01 ~]# ll | grep cert-main-master.sh

-rwxr--r-- 1 root root 638 Mar 1 17:07 cert-main-master.sh

[root@master01 ~]# cat cert-main-master.sh

USER=root # customizable

CONTROL_PLANE_IPS="192.168.1.12 192.168.1.13"

for host in ${CONTROL_PLANE_IPS}; do

scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:

scp /etc/kubernetes/pki/ca.key "${USER}"@$host:

scp /etc/kubernetes/pki/sa.key "${USER}"@$host:

scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:

scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:

scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:

scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:etcd-ca.crt

# Quote this line if you are using external etcd

scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:etcd-ca.key

done

Move the certificates into place on master02:

On master02, run the script cert-other-master.sh to move the certificates into the expected directories.

[root@master02 ~]# pwd

/root

[root@master02 ~]# ll|grep cert-other-master.sh

-rwxr--r-- 1 root root 484 Mar 1 17:11 cert-other-master.sh

[root@master02 ~]# more cert-other-master.sh

USER=root # customizable

mkdir -p /etc/kubernetes/pki/etcd

mv /${USER}/ca.crt /etc/kubernetes/pki/

mv /${USER}/ca.key /etc/kubernetes/pki/

mv /${USER}/sa.pub /etc/kubernetes/pki/

mv /${USER}/sa.key /etc/kubernetes/pki/

mv /${USER}/front-proxy-ca.crt /etc/kubernetes/pki/

mv /${USER}/front-proxy-ca.key /etc/kubernetes/pki/

mv /${USER}/etcd-ca.crt /etc/kubernetes/pki/etcd/ca.crt

# Quote this line if you are using external etcd

mv /${USER}/etcd-ca.key /etc/kubernetes/pki/etcd/ca.key

[root@master02 ~]# ./cert-other-master.sh

Move the certificates into place on master03:

Run the same script, cert-other-master.sh, on master03.

[root@master03 ~]# pwd

/root

[root@master03 ~]# ll|grep cert-other-master.sh

-rwxr--r-- 1 root root 484 Mar 1 17:11 cert-other-master.sh

[root@master03 ~]# ./cert-other-master.sh
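Before running kubeadm join on master02 and master03, it can be worth confirming that all eight shared files actually arrived, since a failed scp is easy to miss. A minimal sketch follows; missing_control_plane_certs is a hypothetical helper, and the file list is the one copied by the scripts above.

```shell
#!/usr/bin/env bash
# List the shared certificate files that are absent (or empty) under a PKI
# directory. Prints nothing and returns 0 when everything is in place.
missing_control_plane_certs() {
  local pki="${1:-/etc/kubernetes/pki}" f missing=0
  for f in ca.crt ca.key sa.key sa.pub \
           front-proxy-ca.crt front-proxy-ca.key \
           etcd/ca.crt etcd/ca.key; do
    if [ ! -s "${pki}/${f}" ]; then
      echo "missing: ${pki}/${f}"
      missing=1
    fi
  done
  return "$missing"
}

# Typical use on master02 or master03, as root:
#   missing_control_plane_certs && echo "all certificates in place"
```

The default argument is the directory that cert-other-master.sh moves the files into.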

2. Join master02 and master03 to the cluster

On master02 and master03, run the control-plane join command that was printed when the master was initialized:

kubeadm join 192.168.1.10:6443 --token f2h6fb.iinbxucou1qpwliv --discovery-token-ca-cert-hash sha256:51808557ef1284ba6f6727a086fb25c803b64806ded0c9873aa92e5ce3775795 --control-plane

3. Load the environment variable on master02 and master03

[root@master02 ~]# scp master01:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@master02 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master02 ~]# source .bash_profile

[root@master03 ~]# scp master01:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@master03 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master03 ~]# source .bash_profile

This step makes kubectl usable on master02 and master03 as well.

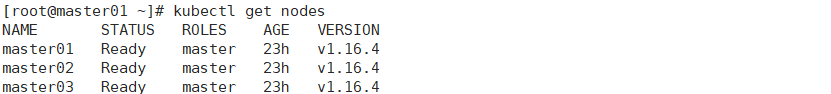

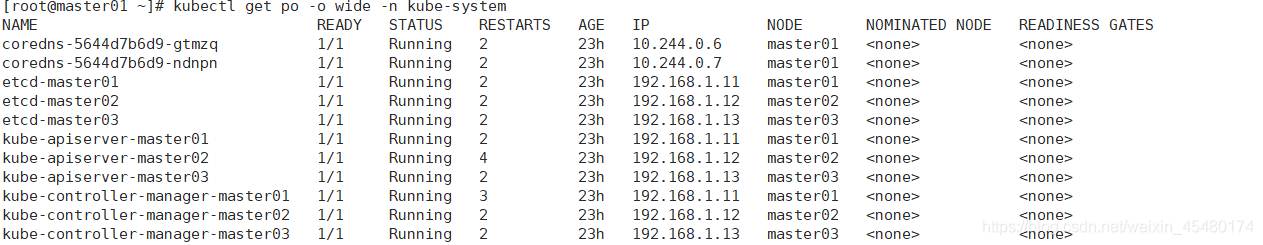

4. Check the cluster nodes

[root@master01 ~]# kubectl get nodes

[root@master01 ~]# kubectl get po -o wide -n kube-system

All control-plane nodes are in the Ready state and all system components are healthy.

IX. Joining the worker nodes to the cluster

1. Run the following kubeadm join command on every worker node

kubeadm join 192.168.1.10:6443 --token f2h6fb.iinbxucou1qpwliv --discovery-token-ca-cert-hash sha256:51808557ef1284ba6f6727a086fb25c803b64806ded0c9873aa92e5ce3775795

This is the worker join command that was printed when the master was initialized.

2. Check the cluster nodes

[root@master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready master 23h v1.16.4

master02 Ready master 23h v1.16.4

master03 Ready master 23h v1.16.4

work01 Ready <none> 23h v1.16.4

work02 Ready <none> 23h v1.16.4

work03 Ready <none> 23h v1.16.4

X. Configuring the client

1. Configure the Kubernetes repository and install kubectl

1.1 Add the Kubernetes repository

[root@client ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

1.2 Refresh the cache

[root@client ~]# yum clean all

[root@client ~]# yum -y makecache

1.3 Install kubectl

[root@client ~]# yum install -y kubectl-1.16.4

Keep the installed version the same as the cluster version.

2. Configure and load command completion

2.1 Install bash-completion

[root@client ~]# yum -y install bash-completion

[root@client ~]# source /etc/profile.d/bash_completion.sh

2.2 Copy the admin.conf file

[root@client ~]# mkdir -p /etc/kubernetes

[root@client ~]# scp 192.168.1.11:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@client ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@client ~]# source .bash_profile

2.3 Load command completion

[root@client ~]# echo "source <(kubectl completion bash)" >> ~/.bash_profile

[root@client ~]# source .bash_profile

Test kubectl:

[root@client ~]# kubectl get nodes

[root@client ~]# kubectl get cs

[root@client ~]# kubectl get po -o wide -n kube-system

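XI. Deploying the Dashboard

All operations in this part are performed on the client.

1. Download the YAML

[root@client ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

If the connection times out, retry a few times. recommended.yaml has also been uploaded and can be downloaded at the end of the article.

2. Configure the YAML

2.1 Change the image registry

[root@client ~]# sed -i 's/kubernetesui/registry.cn-hangzhou.aliyuncs.com\/loong576/g' recommended.yaml

The default image registry is unreachable from this network, so it is replaced with the Alibaba Cloud registry.

2.2 External access

[root@client ~]# sed -i '/targetPort: 8443/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' recommended.yaml

This configures a NodePort so that the Dashboard can be reached from outside at https://NodeIp:NodePort ; the port here is 30001.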

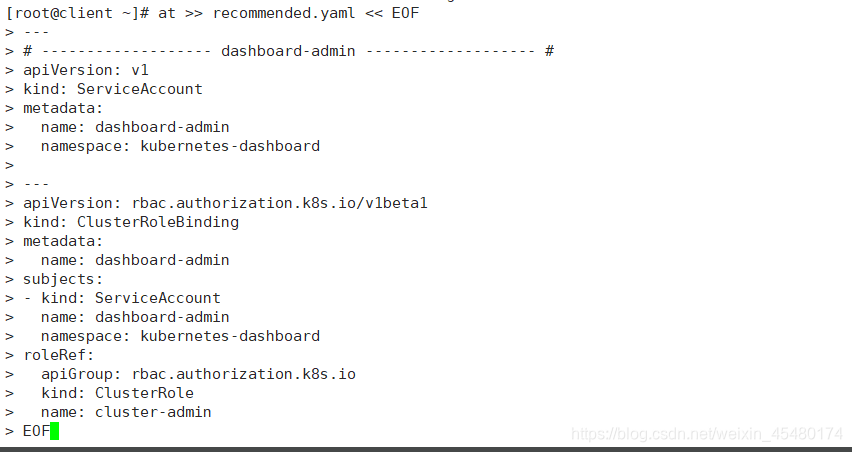

2.3 Add an administrator account

[root@client ~]# cat >> recommended.yaml << EOF

---

# ------------------- dashboard-admin ------------------- #

apiVersion: v1

kind: ServiceAccount

metadata:

  name: dashboard-admin

  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

  name: dashboard-admin

subjects:

- kind: ServiceAccount

  name: dashboard-admin

  namespace: kubernetes-dashboard

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

EOF

This creates a cluster-admin account for logging in to the Dashboard.

3. Deploy and access

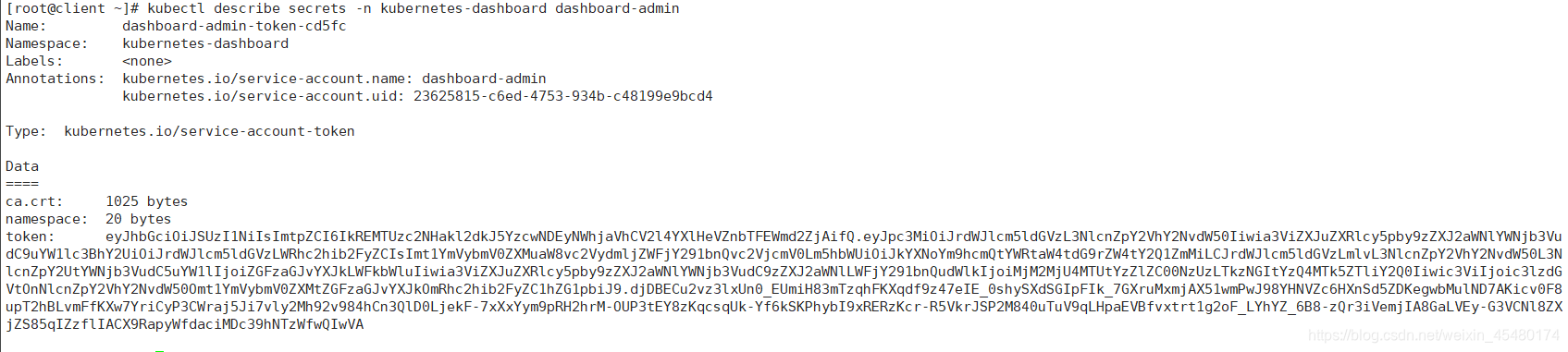

3.1 Deploy the Dashboard

[root@client ~]# kubectl apply -f recommended.yaml

3.2 Check the service status

[root@client ~]# kubectl get all -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-5f4bf8c7d8-78s87 1/1 Running 2 23h

pod/kubernetes-dashboard-8478d57dc6-8xd57 1/1 Running 4 23h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.111.12.107 <none> 8000/TCP 23h

service/kubernetes-dashboard NodePort 10.111.172.190 <none> 443:30001/TCP 23h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/dashboard-metrics-scraper 1/1 1 1 23h

deployment.apps/kubernetes-dashboard 1/1 1 1 23h

NAME DESIRED CURRENT READY AGE

replicaset.apps/dashboard-metrics-scraper-5f4bf8c7d8 1 1 1 23h

replicaset.apps/kubernetes-dashboard-8478d57dc6 1 1 1 23h

3.3 View the token

[root@client ~]# kubectl describe secrets -n kubernetes-dashboard dashboard-admin

The token is:

eyJhbGciOiJSUzI1NiIsImtpZCI6IkREMTUzc2NHakl2dkJ5YzcwNDEyNWhjaVhCV2l4YXlHeVZnbTFEWmd2ZjAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tY2Q1ZmMiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMjM2MjU4MTUtYzZlZC00NzUzLTkzNGItYzQ4MTk5ZTliY2Q0Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.djDBECu2vz3lxUn0_EUmiH83mTzqhFKXqdf9z47eIE_0shySXdSGIpFIk_7GXruMxmjAX51wmPwJ98YHNVZc6HXnSd5ZDKegwbMulND7AKicv0F8upT2hBLvmFfKXw7YriCyP3CWraj5Ji7vly2Mh92v984hCn3QlD0LjekF-7xXxYym9pRH2hrM-OUP3tEY8zKqcsqUk-Yf6kSKPhybI9xRERzKcr-R5VkrJSP2M840uTuV9qLHpaEVBfvxtrt1g2oF_LYhYZ_6B8-zQr3iVemjIA8GaLVEy-G3VCNl8ZXjZS85qIZzflIACX9RapyWfdaciMDc39hNTzWfwQIwVA

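3.4 Access

Open https://VIP:30001 in a browser such as Firefox.

Log in with the token.

The Dashboard provides cluster management, workloads, service discovery and load balancing, storage, ConfigMaps, log viewing, and other functions.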

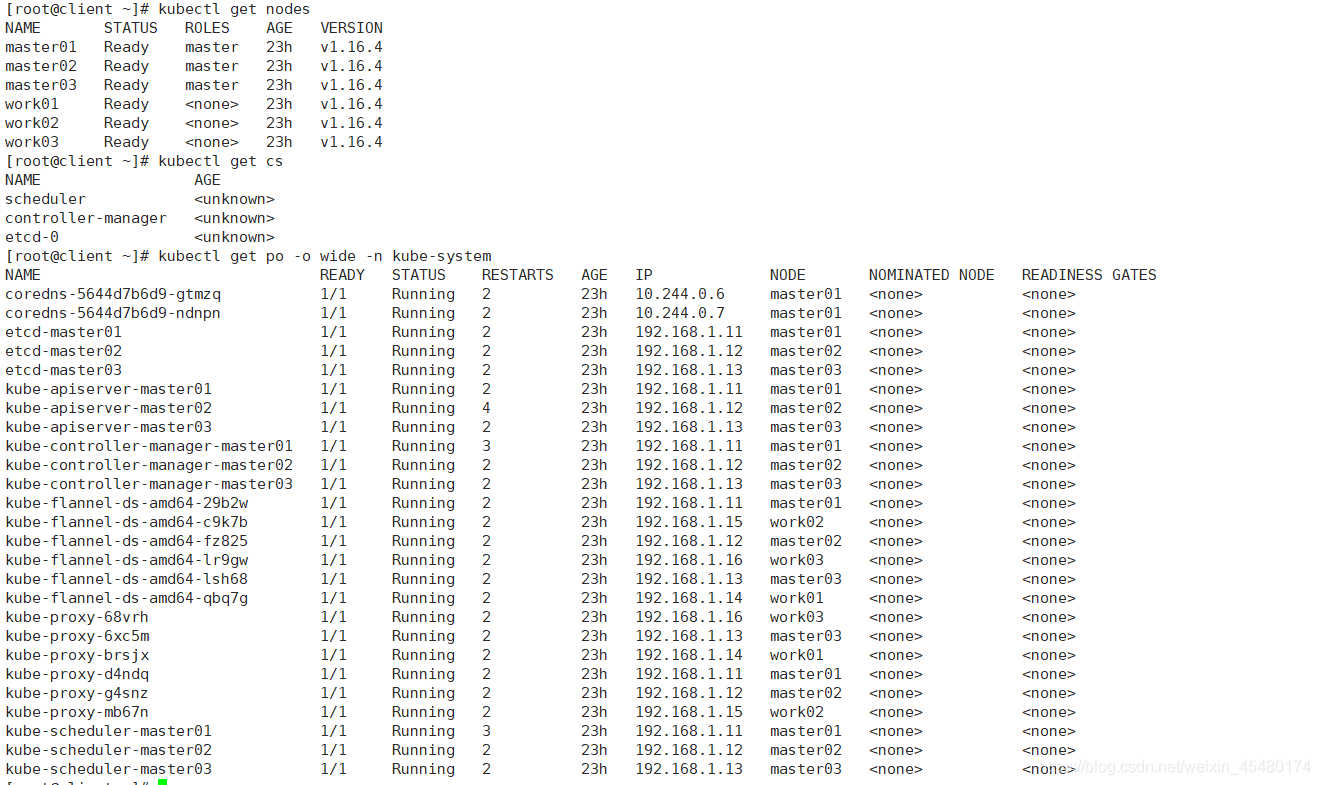

XII. Testing cluster high availability

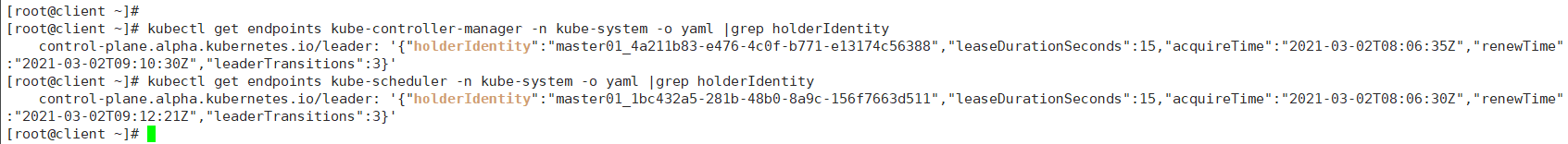

1. Locate the components

First find the node serving the apiserver by checking which node holds the VIP, then find the nodes running the active scheduler and controller-manager through their leader-election records:

[root@master01 ~]# ip a|grep 10

inet 192.168.1.10/32 scope global eth0

[root@client ~]# kubectl get endpoints kube-controller-manager -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master01_4a211b83-e476-4c0f-b771-e13174c56388","leaseDurationSeconds":15,"acquireTime":"2021-03-02T08:06:35Z","renewTime":"2021-03-02T09:10:30Z","leaderTransitions":3}'

[root@client ~]# kubectl get endpoints kube-scheduler -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master01_1bc432a5-281b-48b0-8a9c-156f7663d511","leaseDurationSeconds":15,"acquireTime":"2021-03-02T08:06:30Z","renewTime":"2021-03-02T09:12:21Z","leaderTransitions":3}'

| Component | Node |
|---|---|
| apiserver | master01 |
| controller-manager | master01 |
| scheduler | master01 |
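The holderIdentity value has the form node name, underscore, unique id, so the node can be pulled out of the annotation mechanically instead of read by eye. A small sketch follows; leader_node is a hypothetical helper that reads the kubectl output on stdin, and the sample line is shortened from the output above.

```shell
#!/usr/bin/env bash
# Extract the node name from a leader-election annotation read on stdin.
# holderIdentity has the form "<node>_<uuid>"; the part before '_' is the node.
leader_node() {
  sed -n 's/.*"holderIdentity":"\([^_"]*\)_.*/\1/p'
}

annotation='control-plane.alpha.kubernetes.io/leader: {"holderIdentity":"master01_4a211b83-e476-4c0f-b771-e13174c56388","leaseDurationSeconds":15}'
printf '%s\n' "$annotation" | leader_node
```

Against a live cluster the call would be kubectl get endpoints kube-scheduler -n kube-system -o yaml | leader_node, and likewise for kube-controller-manager.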

2.1 Shut down master01 to simulate a failure

[root@master01 ~]# init 0

2.2 Check the components

The VIP has moved to master02:

[root@master02 ~]# ip a|grep 10

inet 192.168.1.10/32 scope global eth0

The controller-manager and scheduler leaders have moved as well:

[root@client ~]# kubectl get endpoints kube-controller-manager -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master02_c57afc9d-24fe-4915-b3e4-2c2fc39abd73","leaseDurationSeconds":15,"acquireTime":"2021-03-02T09:19:18Z","renewTime":"2021-03-02T09:19:32Z","leaderTransitions":4}'

[root@client ~]# kubectl get endpoints kube-scheduler -n kube-system -o yaml |grep holderIdentity

control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master02_730fa0fd-94d4-4b6f-b75a-be688109c19c","leaseDurationSeconds":15,"acquireTime":"2021-03-02T09:19:17Z","renewTime":"2021-03-02T09:19:37Z","leaderTransitions":4}'

| Component | Node |
|---|---|
| apiserver | master02 |
| controller-manager | master02 |
| scheduler | master02 |

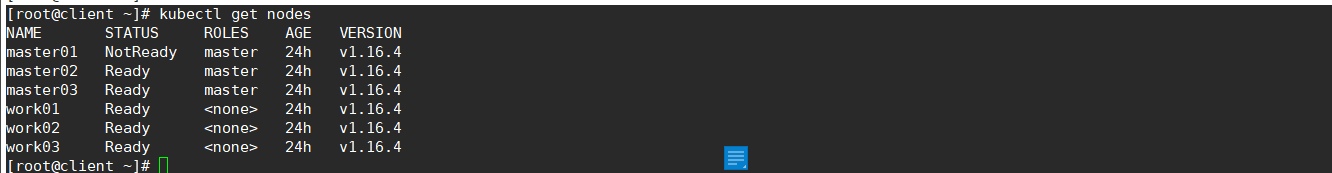

2.3 Cluster functional test

[root@client ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 NotReady master 24h v1.16.4

master02 Ready master 24h v1.16.4

master03 Ready master 24h v1.16.4

work01 Ready <none> 24h v1.16.4

work02 Ready <none> 24h v1.16.4

work03 Ready <none> 24h v1.16.4

master01 is now in the NotReady state.

Create pods:

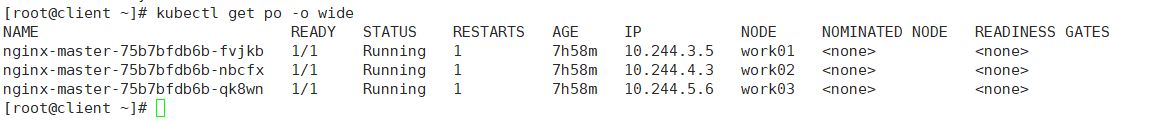

[root@client ~]# more nginx-master.yaml

apiVersion: apps/v1 # the manifest uses the apps/v1 Kubernetes API

kind: Deployment # resource type: Deployment

metadata: # metadata of the resource

  name: nginx-master # Deployment name

spec: # Deployment spec

  selector:

    matchLabels:

      app: nginx

  replicas: 3 # three replicas

  template: # Pod template

    metadata: # Pod metadata

      labels: # labels

        app: nginx # label key app, value nginx

    spec: # Pod spec

      containers:

      - name: nginx # container name

        image: nginx:latest # image used to create the container

[root@client ~]# kubectl apply -f nginx-master.yaml

deployment.apps/nginx-master created

[root@client ~]# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-master-75b7bfdb6b-fvjkb 1/1 Running 1 7h58m 10.244.3.5 work01 <none> <none>

nginx-master-75b7bfdb6b-nbcfx 1/1 Running 1 7h58m 10.244.4.3 work02 <none> <none>

nginx-master-75b7bfdb6b-qk8wn 1/1 Running 1 7h58m 10.244.5.6 work03 <none> <none>

Shut down master02:

[root@master02 ~]# init 0

Check the VIP:

[root@master03 ~]# ip a|grep 10

inet 192.168.1.10/32 scope global eth0

The VIP has moved to the only remaining control-plane node, master03.

Cluster functional test:

[root@client ~]# kubectl get nodes

Error from server: etcdserver: request timed out

[root@client ~]# kubectl get nodes

Unable to connect to the server: dial tcp 192.168.1.10:6443: connect: no route to host

These tests show that when one control-plane node goes down, the VIP moves and every cluster function keeps working. Once master02 is also shut down, however, only one of the three etcd members remains, etcd loses quorum, and the whole k8s cluster can no longer serve requests.

That concludes this manual k8s setup; I hope it helps. If you have questions about using Alibaba Cloud or want purchasing advice, feel free to send a private message or leave a comment. Thank you for your support.